My2Voice - Even if I don't speak, I can talk

An app not only for the completely non-speaking.

Petr Novák has been supporting people with various disabilities, especially people with limited mobility and speech impairments, for more than 10 years. He has been involved in several European projects focused on the development of assistive technologies and has also created applications for home rehabilitation.

Speech impairments can also affect otherwise healthy people, if only temporarily, for example after vocal cord surgery or a stroke. Most often, however, they are related to a physical or mental disability. Speech problems can also be experienced by elderly people whose speech can no longer be understood well. No Czech-language application available on the market in the Czech Republic sufficiently covers the requirements of these people in their daily communication with those around them.

The My Second Voice app tries to help all these groups of people communicate with their surroundings. It respects the different needs and, of course, abilities of individual users. It therefore offers a wide range of ways to enter the content to be spoken, from simple pictures to complex pre-made phrases and sentences. The application can evolve gradually together with its user. Kindergartens and schools that teach children with various communication problems could form a large potential group of users.

What problems does it solve for its customers?

Speech is a very important ability for easy and, above all, quick everyday communication. It allows a large amount of information to be conveyed easily and quickly, even to several people at the same time. Those who are partially or completely deprived of this ability naturally find contact with the outside world very difficult.

The My Second Voice application was created to help these people. It runs on Google's Android, the most widely used operating system for mobile devices. Users can run it on a wide range of small mobile phones or larger tablets, depending on their current needs. Text-to-speech is provided directly by the operating system, so there is no need to purchase anything else.

The app, called My Second Voice, has two main goals:

- To combine several basic modes of communication into a coherent whole.

- To allow the user not only to customize the application as much as possible, but especially to create it completely according to their own needs.

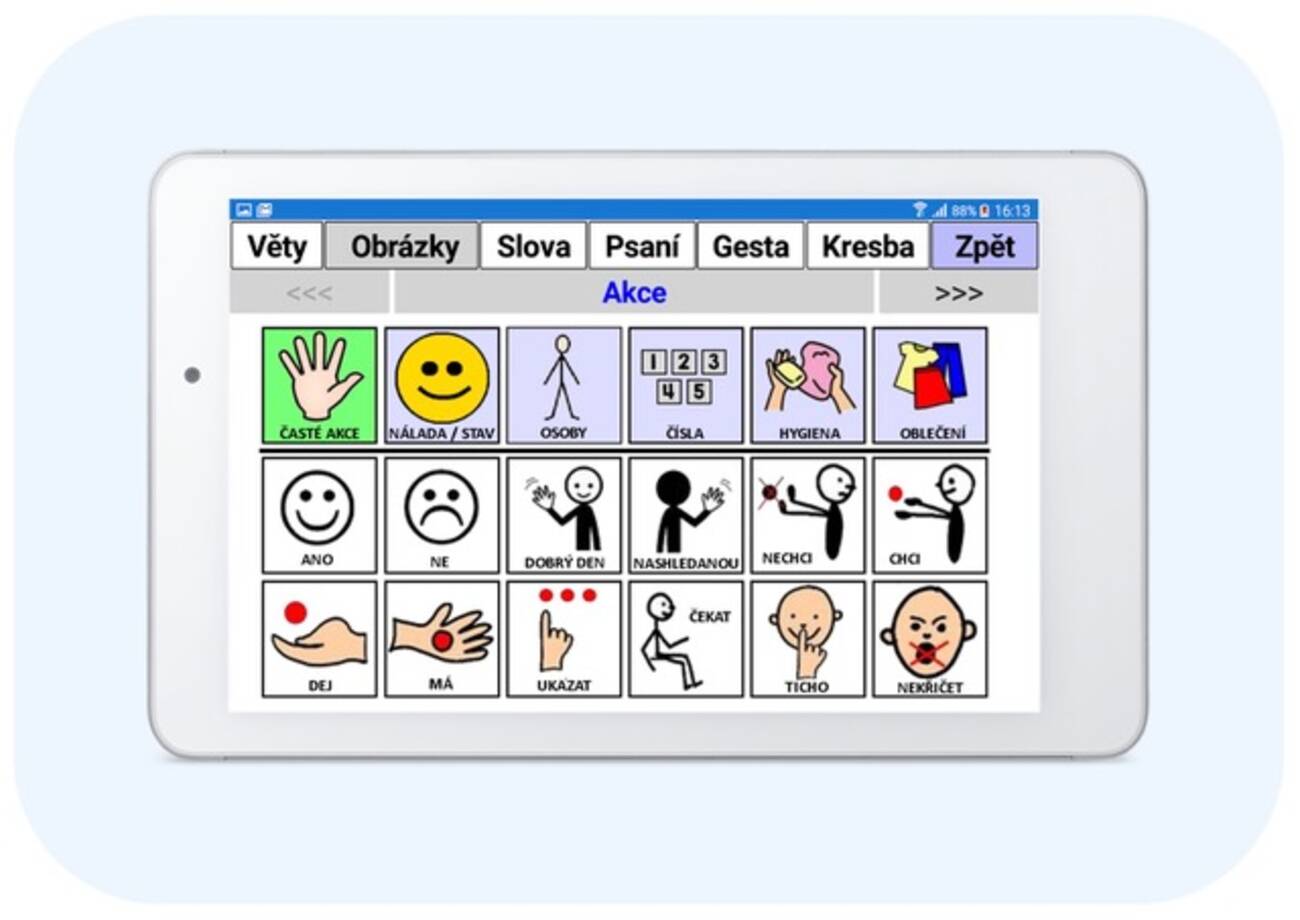

The My Second Voice app provides several ways for everyday communication with the environment:

- Sentences / phrases - Pronounce pre-prepared texts by touching them.

- Pictures - Pronouncing arbitrary texts (words/sentences) associated with pictures.

- Words / short sentences - Constructing simple sentences from a list of prepared words.

- Written text - Writing your own text (letter by letter) and reading it as required.

- Gestures - Pronounce words/sentences according to the path of finger movement on the screen.

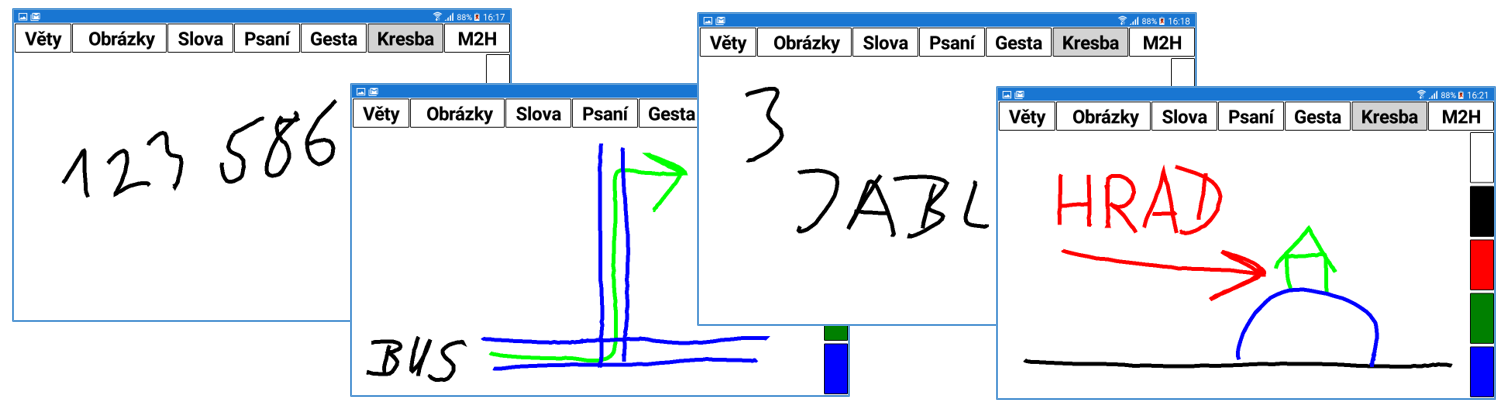

- Painting - Ability to quickly paint anything with your finger.

The content of the application, i.e. all displayed texts and pictures as well as the spoken texts, can be edited and created completely according to your own needs. In many cases, the appearance and behaviour of the entire app can also be modified extensively (even directly on a tablet or mobile phone). It is also possible to share content on the WWW and download it into the app.

Examples of application use

Users with severe speech impairment but good mobility, who have no problems typing quickly, will primarily use the conversion of written text to speech, with some help and prediction.

Pupils and students, and in fact all users, will often use a list of pre-written words and sentences that are spoken at a touch and divided under different headings (shopping, school, home, ...). This helps in situations where there is no time for manual text input, such as buying a bus ticket.

Users whose more severe physical disabilities do not allow them to enter the text letter by letter will benefit from the quick selection of predefined words in columns according to different headings. This allows them to quickly construct different sentences according to the current situation.

Elderly people with advanced dementia, for example, will use picture communication: they look at familiar icons, recognise their meaning and communicate with their surroundings by selecting pictures one after another.

It is also possible to convert "movement gestures" into spoken "sentences". By moving a finger over a suitable picture, a lot of information can be spoken. Examples for a picture of a person:

- I touch the head and move my finger to the left - "look to the left" is spoken

- I touch the shoe and move my finger outwards - "take off your shoes" is spoken

- I touch only the head - "look at me" or "listen to me" is spoken

By preparing your own text or image file, My2Voice can be used for completely different purposes:

- Hospitals - People after vocal cord surgery can easily answer a doctor's questions using prepared pictures/text.

- Teaching children with various speech and language disorders.

- Inserting picture rhymes/communication symbols - Teaching children how to construct sentences using, for example, communication symbols.

Further uses depend only on the users' ideas. Existing apps do not offer such possibilities, so My2Voice on a phone or tablet can be a great help to non-speakers in many everyday situations.

Product development phase

The application is currently in test mode and is therefore available only to a limited number of selected users with basic technical knowledge, who provide feedback.

Testing will continue for a few months, after which the application will be available for purchase directly from CTU.

Detailed specification

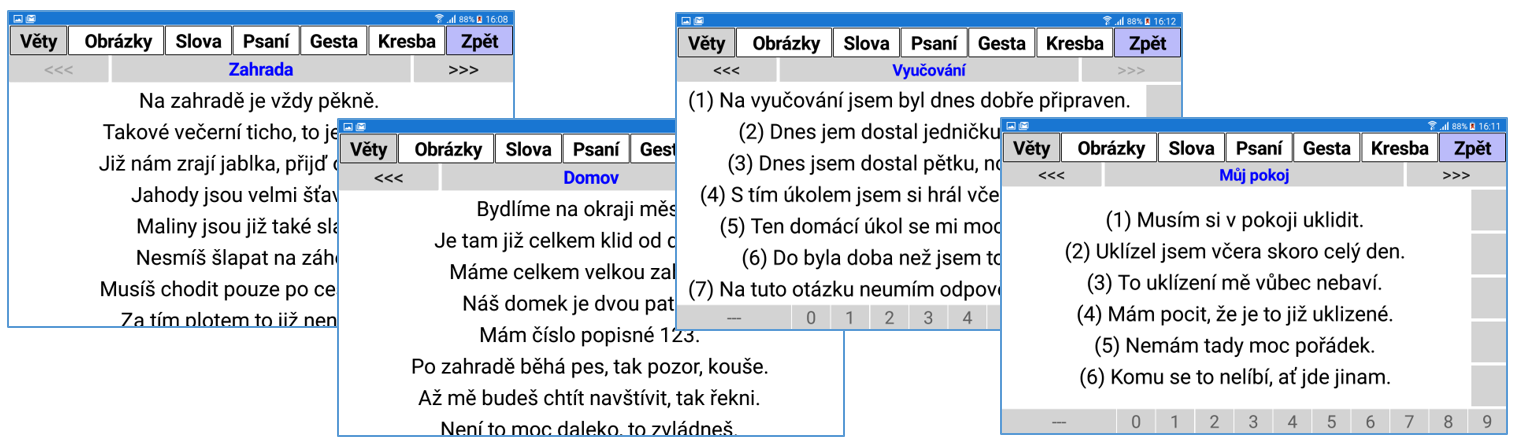

The most basic mode is "Sentences/Phrases". It is a set of sentences or phrases.

- They are displayed as a list that can be scrolled (up/down). Touching a text with your finger speaks it, i.e. converts it into spoken language.

- It is possible to enter one (shorter) text to display and a different (longer) text to speak.

- The texts in the list can be sorted by priority and also coloured, for example to express mood, so that they can be found quickly.

- They are arranged into themes and then into groups that can be easily switched between. For example, you can define the theme "School" and within it the groups "Mathematics" and "Geography". In this way, the "Sentences/Phrases" can be divided according to the current situation, which shortens the displayed lists and makes the desired text faster to find.

- At the same time, the "Sentences/Phrases" can be numbered and a quick navigation (numeric or bold) can be used, especially for longer lists.

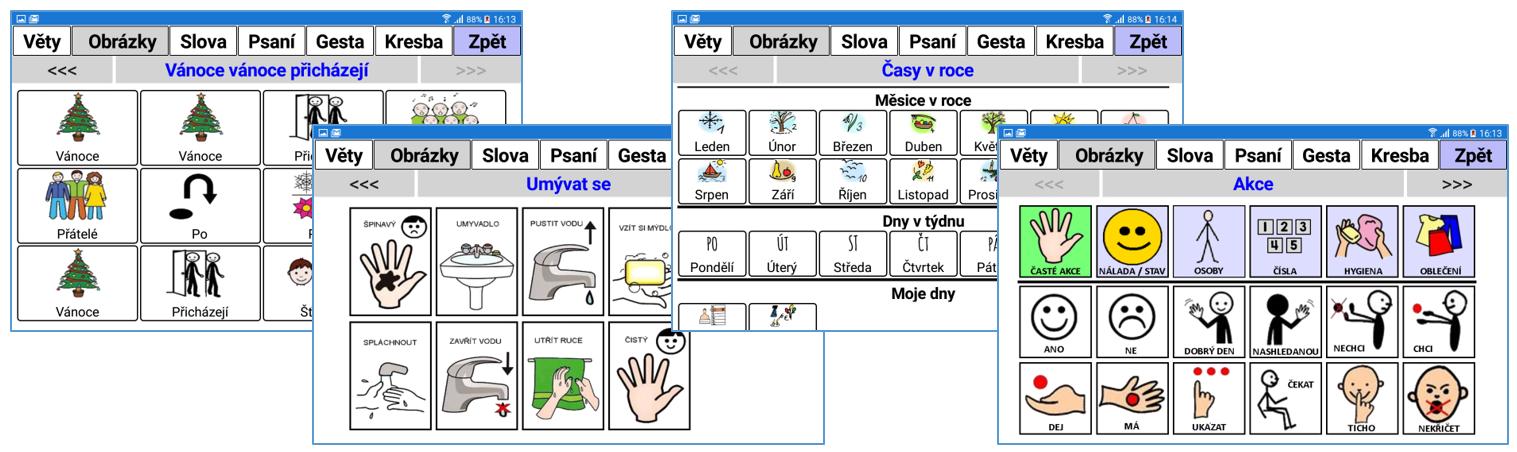

The second mode is "Images". This is, in most cases, a matrix of arbitrary (smaller) images, each tied to a text that is spoken when the image is touched.

- A picture does not have to represent just one spoken word, but a whole sentence or even a much longer text.

- In most cases, these are the widely used "communication pictograms", which express not only a need, desire or request, but also a mood.

- However, communication pictograms are not everything. In many situations you need to create your own pictures, for example, according to your surroundings: my room - "I am going to my room", a photo of a friend - "I am going to visit ...", etc. You can also use pictures of family members, for example: a picture of grandma with a question mark - "where is grandma".

- The pictures are also arranged into themes and further grouped within them. Individual pictures can be set up to switch between these groups, which makes it possible to build a comprehensive set of pictures/pictograms for quick communication in many everyday situations.

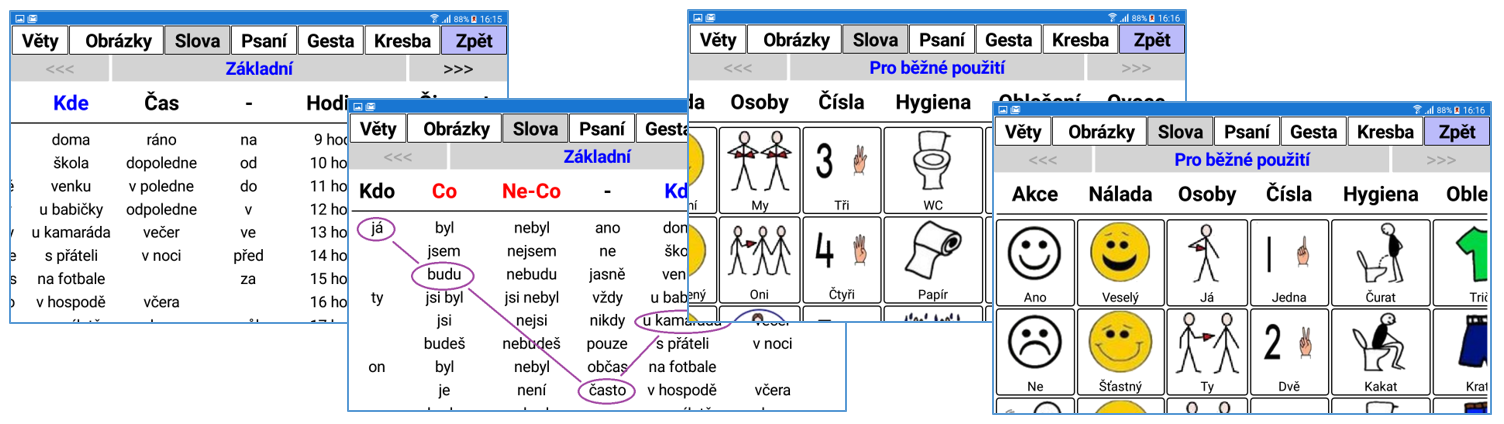

The third mode is "Words". This is a way to quickly construct simple and therefore concise sentences using pre-made word lists.

- The words are displayed in columns. The first column contains, for example, pronouns (I, you, he, ...), the second the verb tense (was, were, am, will, ...), the third the time of day (morning, noon, evening, ...), the fourth the place (home, school, ...), etc.

- There is no limit on the number of columns or words, although clarity should be kept in mind. One word can then be selected from each column in turn by touching it.

- In the current version, each selected word is read immediately, without waiting for the whole sentence to be selected (although the option to wait is already prepared). A short sentence like "I - will - be - home - tonight" can thus be constructed very quickly word by word, which is much easier than typing it.

- Of course, it is not necessary to select a word from each column. For example, for the question "Where will you be tonight?", only the word "At home" needs to be selected. Word lists can therefore also be used just for quick answers to questions about time or place.

- Instead of words (made of letters), (small) pictures can be used, for example suitable communication pictograms, and the sentence can be constructed by selecting them one by one. This can be a great advantage for young children (not yet proficient in writing/reading), or people with limited reading/writing skills.
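The column-based construction described above can be illustrated with a minimal Python sketch. It is not the app's actual code: the column contents, the column order (place before time, so that the English example reads naturally) and the function name `build_sentence` are assumptions made for illustration only.

```python
from typing import List, Optional, Sequence

# Hypothetical word columns, loosely following the example in the text.
COLUMNS: List[List[str]] = [
    ["I", "you", "he"],                       # pronouns
    ["was", "am", "will be"],                 # verb tense
    ["home", "at school"],                    # place
    ["in the morning", "at noon", "tonight"], # time of day
]


def build_sentence(columns: Sequence[Sequence[str]],
                   choices: Sequence[Optional[int]]) -> str:
    """Join the word chosen in each column; None means the column is skipped."""
    if len(choices) > len(columns):
        raise ValueError("more choices than columns")
    words = []
    for column, index in zip(columns, choices):
        if index is None:
            continue  # the user did not pick anything from this column
        words.append(column[index])
    return " ".join(words)


# One word from every column gives a complete short sentence.
full_sentence = build_sentence(COLUMNS, [0, 2, 0, 2])

# A single column is enough for a quick answer to "Where will you be tonight?".
quick_answer = build_sentence(COLUMNS, [None, None, 0, None])
```

Skipping a column is modelled with `None`, which mirrors the point that a word does not have to be selected from every column.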

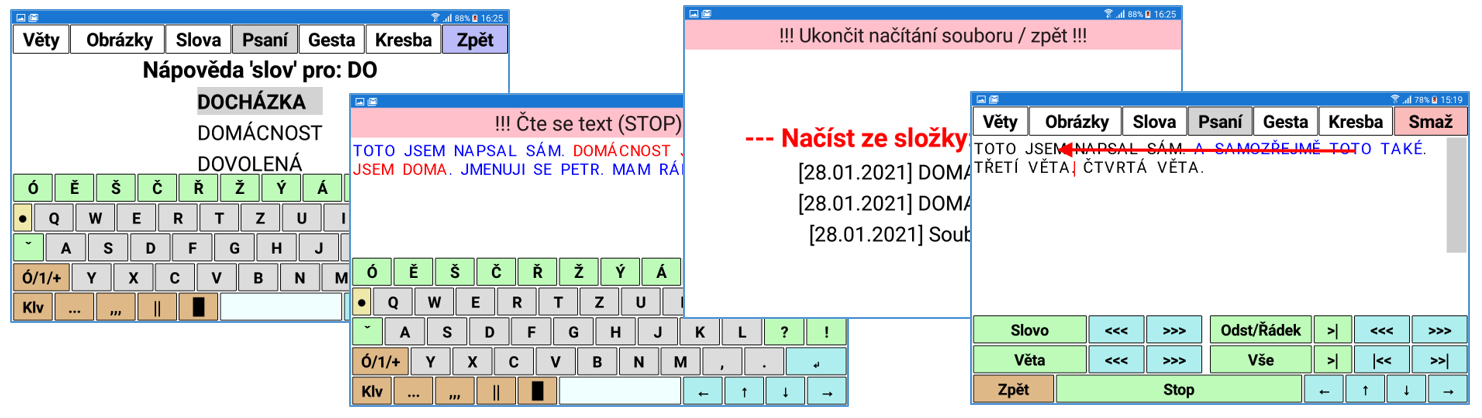

The fourth mode is "Writing". There is always a need to pronounce some text that is not prepared in advance. Using the pictorial letter keyboard, one can write basically any text and then have it spoken. Although it is a simple typing system, it provides several useful conveniences.

- The written texts can be saved in a file and can be viewed and used at any time later (for homework, essay exercises).

- Written text can be stored in up to five quick (working) memories for fast recall, for example to prepare several answers for a school lesson.

- To speed up writing, there are "tweaks" such as custom shortcuts that expand into longer text (type "HAY", press the button and the text "How are you?" appears). There are also pre-made words and sentences (I type the first letters, bring up the list and select the word/sentence).

- The reading options, i.e. converting typed text to speech, are a distinctive feature. There are two options: using the image keyboard, or marking the text with a finger stroke.

- You can use the keyboard to move around words, sentences or paragraphs in the text and also have that part read at any time. When reading, the cursor moves behind the text you have just read, so you can start the next (part of the) reading or repeat anything.

- The text can also be read by marking it with a finger swipe on the display. In short, by dragging your finger, you can colour-code a part of the text (word, sentence, paragraph) to be read and it will be spoken. The reading progress is of course colour-coded.
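The two writing aids mentioned above, shortcut expansion and selection from a list after typing the first letters, can be sketched in Python as follows. This is only an illustration under stated assumptions: the phrase list, the case-insensitive matching and the function names `expand_shortcut` and `suggest` are hypothetical and do not describe how the app itself is implemented.

```python
from typing import Dict, List

# Hypothetical user-defined shortcuts; "HAY" is the example from the text.
SHORTCUTS: Dict[str, str] = {"HAY": "How are you?"}

# Hypothetical list of pre-made words and sentences.
PHRASES: List[str] = ["Good morning", "Good night", "Thank you", "I need help"]


def expand_shortcut(typed: str, shortcuts: Dict[str, str]) -> str:
    """Return the long text for a known shortcut, otherwise the typed text unchanged."""
    return shortcuts.get(typed.strip().upper(), typed)


def suggest(prefix: str, phrases: List[str]) -> List[str]:
    """Return the pre-made phrases that start with the typed letters (case-insensitive)."""
    wanted = prefix.lower()
    return [phrase for phrase in phrases if phrase.lower().startswith(wanted)]


expanded = expand_shortcut("HAY", SHORTCUTS)
candidates = suggest("goo", PHRASES)
```

Text that is not a known shortcut is returned unchanged, so the user never loses what has been typed.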

The penultimate and completely unique mode is "Gestures". Here you can define basically arbitrary routes of finger movement on the display and each of these routes is assigned a spoken text.

- There is a background image on which the markers (locations) are created. The desired sequences of markers are assigned words or sentences to be spoken.

- If the user swipes over certain markers in the image, the word/sentence associated with the sequence of these markers is spoken.

- In this way, pre-made sentences can be created and then recalled in many variations. An example might be an explanation of a subway journey (station names, changes, ...). The notation for gestures can be made completely custom (picture, sequences, words/sentences).
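The idea of assigning spoken text to sequences of markers can be sketched in Python. The marker names and phrases below are taken from the picture-of-a-person example earlier in this document; the data structure, the removal of repeated markers and the function names are assumptions for illustration, not the app's real gesture format.

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical gesture table: a sequence of markers maps to a spoken text.
GESTURES: Dict[Tuple[str, ...], str] = {
    ("head",): "Look at me",
    ("head", "left"): "Look to the left",
    ("shoe", "outside"): "Take off your shoes",
}


def collapse_repeats(markers: Iterable[str]) -> Tuple[str, ...]:
    """Drop consecutive duplicates; a slow finger may report one marker several times."""
    result = []
    for marker in markers:
        if not result or result[-1] != marker:
            result.append(marker)
    return tuple(result)


def phrase_for_path(markers: Iterable[str],
                    gestures: Dict[Tuple[str, ...], str]) -> Optional[str]:
    """Return the text assigned to the swiped marker sequence, or None if unknown."""
    return gestures.get(collapse_repeats(markers))


spoken = phrase_for_path(["head", "head", "left"], GESTURES)
```

An unknown sequence returns `None`, so nothing is spoken when the finger follows a path that has not been defined.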

While any text is being read, a big red button at the top shows information about the reading in progress. It serves not only to stop or interrupt the reading. More importantly, it shows a hard-of-hearing user whether the desired text is actually being read and how long the reading takes (before the next one is started).

The possibility of adding content according to your own needs

Probably the biggest advantage of the app is the possibility to edit or add basically all texts/images completely according to your own needs. The app actually works as an "interpreter" that displays content and behaves according to a specified configuration.

- The content is thus created in the form of (simple) text files that describe what is displayed where, how it is displayed, and which text is converted to speech when a given action is performed.

- Basic files (sentences/pictures/words) can be created even by a person with minimal computer knowledge (it is only text). Of course some files, for example gestures, are a bit more complex.

- The created content sets (e.g. by user type) can be placed on the WWW, and users can download them as needed. For clarity, they can be separated on the WWW by creator/author name and can include conditional access (for example a password for special uses). Of course, it is also possible to download just a single file from a selected author, for example one for picture communication.

- For example, content needed/prepared for teaching can be placed on the WWW to allow students to download communication images used in teaching in a particular school.
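To give an impression of how content stored in simple text files might be read, here is a minimal Python sketch. The file format is purely hypothetical and is not the app's real format, which is not specified here: it assumes `[Group]` header lines, `#` comment lines, and entries of the form `displayed text | spoken text`, where the spoken text may be omitted.

```python
from typing import Dict, List, Tuple

# Hypothetical phrase file; NOT the real My2Voice file format.
SAMPLE = """\
# phrases for everyday situations
[Shopping]
Bus ticket | One ticket to the city centre, please.
Thank you
"""


def parse_phrases(text: str) -> Dict[str, List[Tuple[str, str]]]:
    """Parse groups of (displayed text, spoken text) pairs from the assumed format."""
    groups: Dict[str, List[Tuple[str, str]]] = {}
    current = "General"  # group used for entries before the first header
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1].strip()
            groups.setdefault(current, [])
            continue
        shown, separator, spoken = line.partition("|")
        shown = shown.strip()
        spoken = spoken.strip() if separator else ""
        # Without a separate spoken text, the displayed text itself is spoken.
        groups.setdefault(current, []).append((shown, spoken or shown))
    return groups


content = parse_phrases(SAMPLE)
```

The sketch reflects two points from the text: the displayed text can be shorter than the spoken one, and entries are arranged into groups that the user can switch between.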

Many of the application's capabilities are hidden in its settings

Not only the content, but also the appearance of the application can be highly customized to the needs of the user.

- Setting basic parameters such as text sizes/colours, spacing between sentences when reading or hiding modes that are inappropriate/complex for a given user can make the app much clearer.

- Setting the style / touch mode. It is possible to specify how long an item (text, image) must be held before it is read, which eliminates unwanted touches caused by the user's shaking hand. For severe hand tremors, a mode can be set in which the finger only slides across the display (without lifting) and pauses on the desired item for a specified time.

- The settings are very extensive (in the number of items/capabilities) and are constantly being expanded. Customizing the app, and not only its content, is therefore a very important part of using and extending it appropriately.
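The hold-time setting described above can be sketched in Python as a simple dwell-time filter. This is a sketch under assumptions, not the app's implementation: touch input is modelled as a list of `(time in seconds, item under the finger)` samples, and the function name `select_by_dwell` is hypothetical.

```python
from typing import List, Optional, Tuple

# One touch sample: (time in seconds, item under the finger or None).
Sample = Tuple[float, Optional[str]]


def select_by_dwell(samples: List[Sample], hold_time: float) -> Optional[str]:
    """Return the first item the finger rested on for at least hold_time seconds."""
    current: Optional[str] = None
    since = 0.0
    for timestamp, item in samples:
        if item != current:
            # The finger moved to another item (or off all items): restart the timer.
            current, since = item, timestamp
        if current is not None and timestamp - since >= hold_time:
            return current
    return None


# A shaking finger briefly slips from "A" to "B" before settling on "A".
touches = [(0.0, "A"), (0.2, "B"), (0.3, "A"), (0.9, "A"), (1.4, "A")]
selected = select_by_dwell(touches, hold_time=1.0)
```

Short accidental contacts never reach the required hold time, so only the item on which the finger actually pauses is read.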

Improving the use and working with the app

In many cases, the voice coming out of the tablet/phone may not be loud enough to be heard well in the surroundings, especially in city traffic. Any wireless battery-powered speaker can be paired with the tablet/phone via Bluetooth and, for example, hung around the user's neck. This not only noticeably amplifies the voice, but also brings the sound closer to the user's face, giving a much better impression of who is actually speaking.

If longer text needs to be written, a suitable wireless keyboard paired with the tablet/phone via Bluetooth can be used. This is useful in any school teaching, especially for writing homework.

Upcoming application capabilities

There are always situations in which the application cannot be used as easily as originally intended. The application is therefore still being developed, and some additional capabilities are in the pipeline:

A very difficult situation arises, for example, outdoors in bad weather, when a person is wearing a winter jacket and warm gloves. Operating a touchscreen application on the phone is then very difficult. Many mobile phones now contain an NFC (Near Field Communication) reader, and NFC stickers can be purchased very cheaply. These can be conveniently sewn or stuck onto clothes, for example on the sleeves. The application on the mobile phone automatically detects when the phone is held against an NFC sticker and speaks a pre-set phrase or sentence. In this way, phrases like "Can you help me get on (the BUS)" can be prepared for a non-speaking wheelchair user, or "I would like to buy this (pointing with the other hand to ...)" for use in a shop. NFC stickers can be stuck on anything, such as a bag, or even directly on a wheelchair (but not on metal). The user can have as many stickers as needed and can thus react to a situation very quickly.

This style of fast communication can also be used at home. Imagine NFC tags stuck on the fridge or anywhere around the apartment with texts such as "Need to buy milk" or "Running out of orange juice again". It is necessary to carry a mobile phone at all times (to place it on the tag and have the text spoken), but for these people this can be taken for granted.

We have also not forgotten people who at the same time have a major limitation of mobility, especially people with a fine motor disorder, for whom a precise touch on the display is unmanageable. The application can be run on any Android Mini-PC (e.g. a multimedia centre) connected to a regular monitor or TV. Several devices connected via USB or Bluetooth can be used to control the app: a set of up to five buttons under the fingers, a (small) joystick like the one on a wheelchair, or a motion sensor placed, for example, on the wrist. All modes except painting are available in this way, although some are of course somewhat limited. Using the external control, the user can move around sentences and pictures, or write their own text and have it spoken. The app can thus be used by people with movement limitations as well as speech limitations.

Are you interested in this technology?

If you are interested in our technology, products and services, please contact the Technology Transfer Coordinator by phone or email: